China makes first ChatGPT-related arrest for fake news

A man from Gansu has been arrested after allegedly using ChatGPT to generate and spread a fake news story about a fatal train crash. The incident comes just weeks after Chinese lawmakers proposed new legislation on generative AI with a focus on content regulation.

China has made its first known arrest related to the use of ChatGPT, the wildly popular generative artificial intelligence chatbot that has been making headlines around the world. The arrest comes less than a month after the Cyberspace Administration of China released draft rules for ChatGPT-type platforms specifying that generative AI must not produce content that subverts the state.

According to a statement released on Sunday by police in the northwest province of Gansu, the suspect, identified only by his surname, Hóng 洪, was accused of using the AI-driven text generator to create news articles that the authorities described as “false information.”

The story that got Hong into trouble described a fatal train crash that killed nine people in Gansu. The matter first caught the attention of the cyber division of a county police bureau when articles about the supposed accident began appearing online in April. While investigating the case, the police found that multiple versions of the same story, each placing the accident in a different location, had been posted through more than 20 accounts on Baijiahao, a blog-style platform run by Chinese tech giant Baidu. The posts garnered a total of 15,000 views before being removed.

Investigators located Hong’s social media company, based in the southern city of Dongguan in Guangdong Province, and made the arrest on May 5. Under police questioning, the suspect confessed that ChatGPT had generated the train crash story after he fed it prompts about trending news stories in China from the past few years, and that he had produced different versions of the article to get around Baijiahao’s duplicate-content check.

“Hong used modern technology to fabricate false information, spreading it on the internet, which was widely disseminated,” the Gansu police said in the statement, adding that the investigation was still ongoing.

What exactly landed Hong in trouble?

Hong was held on suspicion of “picking quarrels and provoking trouble” (寻衅滋事 xún xìn zī shì). This is a catch-all charge that is traditionally used against political dissidents and carries a maximum prison sentence of 10 years. While the popular understanding of the term is that it can be applied to any behavior the Chinese government dislikes, Donald Clarke, a professor of law specializing in modern China at the George Washington University Law School in Washington, told The China Project that “it’s not as vague as everybody says.”

“The crime is only made out if one of four conditions is met, and one of them is about creating a disturbance in a public place,” he said, adding that there is precedent for applying the charge to suspects accused of creating or spreading misinformation online, as “the Chinese government has very often now interpreted cyberspace as public space.”

“The government has used it many times to prosecute people who did something they didn’t like in cyberspace. And it’s not just fake news. It could be true news. It could be a critical opinion about the leaders. There’s lots of cases where people have been prosecuted for dissemination of unwelcome information online and Chinese officials’ favorite way to attack is by calling it ‘picking quarrels and provoking trouble,’” Clarke added.

Known for its ability to generate human-like text and content in response to complex questions, simple prompts, and other human input, ChatGPT has been a worldwide sensation since its launch last November. However, despite being a popular subject of fascination in China, the tool — like many other major foreign websites and internet applications — is blocked by the sophisticated censorship system colloquially known as the Great Firewall.

Individuals determined to access the blocked service can get around the restrictions using “virtual private network” (VPN) software, a practice that is itself punishable by Chinese security and legal authorities. There has been no official word on how Hong managed to use ChatGPT, but according to Jeremy Daum, a senior fellow at Yale Law School’s Paul Tsai China Center and the founder of China Law Translate, OpenAI’s powerful chatbot was not the main factor leading to Hong’s arrest.

“Had he made up the story the old-fashioned way, the results would be the same. The tech aspect may make the story more interesting, or even useful as a way of drawing attention to the emerging rules, but isn’t really key here,” Daum told The China Project. “AI generation reduces the effort and cost of creating such content, but the content has always been illegal.”

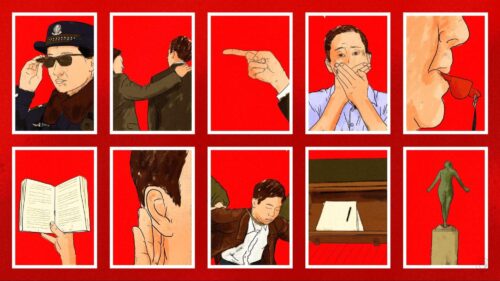

For the Chinese government, cracking down on online content is a measure routinely used to censor information it doesn’t like.

Information control is particularly intense when it comes to major disasters: When a deadly fire broke out at a Beijing hospital last month, social media posts about the incident were quickly censored, and it took hours for the news to reach state-approved news outlets. Fabricating stories about train accidents is especially risky given the fatal Wenzhou train collision in 2011, which prompted public fury over the government’s lack of transparency in how the news was communicated.

According to Clarke, the arrival of ChatGPT has made it harder for Chinese censors to remove unwelcome stories and curb their circulation. “Hong could have rewritten the story 100 times himself, but it takes a lot of time. He could have hired 100 people to do it for him, but that takes money,” he said. “The thing that ChatGPT allows you to do is to bypass the censoring mechanism by rewriting the same unwelcome story in many different ways.”

Making sense of China’s AI regulations

The police statement described Hong’s arrest as the first instance of a Chinese citizen being detained since China’s new regulations on “deepfake” technology took effect in January. Formally known as the “Provisions on the Administration of Deep Synthesis Internet Information Services” and drafted by the Cyberspace Administration of China (CAC), the legislation is China’s first attempt to govern deepfakes. It defines deep synthesis as technologies that use “deep learning, virtual reality, and other synthetic algorithms to produce text, images, audio, video, virtual scenes, and other network information.”

While the rapidly evolving deep synthesis technology could “serve user needs and improve user experience, it has also been used by some unscrupulous people to produce, copy, publish, and disseminate illegal and harmful information, to slander and belittle others’ reputation and honor, and to counterfeit others’ identities,” the CAC explained in its introduction to the new measures.

Although officials promoted the rules in the name of protecting internet users’ interests and maintaining social stability, some analysts were quick to point out that they imposed tighter censorship of online information. For example, one article requires deepfake makers and distributors to adhere to “the correct political direction” and “values trends” in the country, which automatically puts any content deemed offensive by the government in tricky territory.

Building on the deep synthesis legislation, the CAC unveiled a new set of draft guidelines on generative AI services like ChatGPT in April, closing public consultation on them on Wednesday this week. “Inappropriate” content is once again a central concern of the proposed rules, which require AI developers to ensure that generated content embodies “the core values of socialism” and does not “incite subversion of state power” or undermine national unity.

The draft rules also “baldly state that the content created by generative AI must be truthful and accurate,” Daum pointed out in his overview of the document. But truth and accuracy, Daum said, “isn’t always a relevant standard for content no matter how it is generated.”

“Is the Mona Lisa true and accurate? How about Mondrian’s ‘Composition with Red, Blue, and Yellow?’ Sometimes an image, sound, or other media is about aesthetics, and trying to measure or mandate its truth just doesn’t make sense,” he added. “What they are hoping to do in new rules on AI-generated content is limit the deception and confusion of the public. This has to do more with how the content is presented or used than with the content itself.”

Elsewhere in the proposed legislation, a section dedicated to algorithmic bias contains an article ordering Chinese companies to ensure that training data for AI models does not discriminate against people based on their ethnicity, race, or gender. The document also references intellectual property rights and fair competition.

In an effort to catch up to ChatGPT, Chinese tech companies have been barreling into the field of generative AI in the past few months, releasing rival products to mixed reviews. In March, search engine giant Baidu unveiled its much-awaited AI-powered chatbot known as Ernie Bot, but the use of pre-recorded demonstration videos at the showcase disappointed viewers and investors, sending Baidu’s Hong Kong–traded stock price plunging. Last month, Chinese tech behemoth Alibaba announced plans to roll out its own ChatGPT-style service called Tongyi Qianwen. The company said that it would integrate the chatbot across its businesses in the “near future,” but did not give details on its timeline.

China is not the only country concerned with the development of generative AI. In March, Italy became the first country to ban ChatGPT over privacy concerns, although it has since lifted the ban. The European Union has proposed a comprehensive legislative package to bring AI under control, while talks about accountability measures for the technology are also underway within the U.S. government.

But there’s a unique emphasis on content control and censorship in the Chinese government’s push to rein in AI.

“China already has ample rules for censoring or punishing speech it doesn’t like, regardless of how it was generated, and the deep synthesis rules have already incorporated existing content law into the content-generation context,” Daum said. “The big change in the draft AI rules is that they are aiming to go after the providers of generative AI services as well as the user who spreads the information.”